Vertica Parallel Load

As is well known, Vertica has two storage areas: WOS (Write Optimized Store) and ROS (Read Optimized Store). WOS keeps data in memory, while ROS keeps data on disk. If you use the COPY command without the DIRECT hint, data is first loaded into the WOS, and the Tuple Mover later moves it to the ROS. If you load big files or streams, you can bypass the WOS by using the DIRECT hint, which improves load performance.

This post is not really about the DIRECT hint, though. It is about PARALLEL file loading.

Suppose you have a big file, more than 100 GB, and you want to load its data into Vertica as parallel as possible. Where can you parallelize? In two places: file-reading parallelism and loading parallelism.

For loading parallelism, you can change the EXECUTIONPARALLELISM parameter of the resource pool to increase or decrease the number of threads that execute a query. If you set this value to 1, only one thread executes each query. This can reduce response times when you run many small queries, but it lengthens response times for long-running queries. For those queries you should increase the value.

The other method, and the main subject of this post, is file-reading parallelism. Suppose you have multiple machines and one big file that you want to read and load into the Vertica database. By default, only one machine reads the file:

COPY customers FROM LOCAL '/data/customer.dat' DELIMITER '~' REJECTMAX 1000 EXCEPTIONS '/home/dbadmin/ej' REJECTED DATA '/home/dbadmin/rj' ENFORCELENGTH DIRECT STREAM NAME 'stream_customers';

Now suppose you have 4 machines. You can split the file into 4 pieces (for example with the Linux split command) and distribute the pieces across the nodes, which loads much faster than the previous method. With this method you also have to specify the node name for each file:

COPY customers FROM '/data/customer.dat' ON v_myv_node0001, '/data/customer.dat' ON v_myv_node0002, '/data/customer.dat' ON v_myv_node0003, '/data/customer.dat' ON v_myv_node0004 DELIMITER '~' REJECTMAX 1000 EXCEPTIONS '/home/dbadmin/ej' REJECTED DATA '/home/dbadmin/rj' ENFORCELENGTH DIRECT STREAM NAME 'stream_customers';
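The post splits the file with the Linux split command (GNU split can cut at line boundaries with `-n l/4`). The main requirement of that step is that no record gets cut in half. The minimal Java sketch below illustrates it by splitting a delimited file into N pieces of roughly equal byte size, cutting only at line ends. The class name, the piece naming scheme (customer.dat.1, customer.dat.2, ...) and the paths in `main` are assumptions made for this illustration. Copying the pieces to the nodes is not shown.

```java
import java.io.BufferedReader;
import java.io.BufferedWriter;
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.util.ArrayList;
import java.util.List;

public class LineSplitter {
    /**
     * Splits 'source' into at most 'pieces' (>= 1) files of roughly equal byte size,
     * cutting only at line ends so that no record is broken in two.
     * Pieces are written to outDir as <name>.1, <name>.2, ...
     */
    public static List<Path> split(Path source, int pieces, Path outDir) {
        List<Path> outputs = new ArrayList<>();
        BufferedWriter out = null;
        try (BufferedReader in = Files.newBufferedReader(source, StandardCharsets.UTF_8)) {
            long target = Math.max(1, Files.size(source) / pieces);
            long written = 0;
            String line;
            while ((line = in.readLine()) != null) {
                // Open a new piece if none is open yet, or if the current one reached
                // its target size; the last piece absorbs whatever remains.
                if (out == null || (written >= target && outputs.size() < pieces)) {
                    if (out != null) out.close();
                    Path piece = outDir.resolve(source.getFileName() + "." + (outputs.size() + 1));
                    outputs.add(piece);
                    out = Files.newBufferedWriter(piece, StandardCharsets.UTF_8);
                    written = 0;
                }
                out.write(line);
                out.write('\n');   // Unix line ends, whatever the platform
                written += line.getBytes(StandardCharsets.UTF_8).length + 1;
            }
            if (out != null) {
                out.close();
                out = null;
            }
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        } finally {
            if (out != null) {
                try { out.close(); } catch (IOException ignored) { /* already failing */ }
            }
        }
        return outputs;
    }

    public static void main(String[] args) {
        // Hypothetical paths: the target directory must already exist.
        for (Path p : split(Paths.get("/data/customer.dat"), 4, Paths.get("/data/pieces")))
            System.out.println(p);
    }
}
```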

I have also found that putting multiple files on each node loads even faster than the methods above. In the example above, we split the 100 GB customer file into 4 pieces, so each piece holds 25 GB. If you split each piece again on every node and issue a separate COPY command for each set of files (each in its own session, running at the same time), you can see the difference:

-- COPY STREAM 1

COPY customers FROM '/data/customer1.dat' ON v_myv_node0001, '/data/customer1.dat' ON v_myv_node0002, '/data/customer1.dat' ON v_myv_node0003, '/data/customer1.dat' ON v_myv_node0004 DELIMITER '~' REJECTMAX 1000 EXCEPTIONS '/home/dbadmin/ej' REJECTED DATA '/home/dbadmin/rj' ENFORCELENGTH DIRECT STREAM NAME 'stream_customers';

-- COPY STREAM 2

COPY customers FROM '/data/customer2.dat' ON v_myv_node0001, '/data/customer2.dat' ON v_myv_node0002, '/data/customer2.dat' ON v_myv_node0003, '/data/customer2.dat' ON v_myv_node0004 DELIMITER '~' REJECTMAX 1000 EXCEPTIONS '/home/dbadmin/ej' REJECTED DATA '/home/dbadmin/rj' ENFORCELENGTH DIRECT STREAM NAME 'stream_customers';
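The gain in the last example comes from running the COPY streams at the same time, which means issuing them from separate sessions. The sketch below is one way to script that. `buildCopy` generates one COPY per file set, using the same path on every node, and `runConcurrently` submits each statement on its own thread and waits for all of them. The runner is a placeholder: in real use it would execute the SQL over its own JDBC connection, which is not shown. The per-stream stream names and exception/rejected-data file names are this sketch's own choice. They keep the concurrent streams easy to tell apart and avoid sharing reject files.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.StringJoiner;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;
import java.util.function.Consumer;

public class ParallelCopySketch {
    /** Builds one COPY that loads the same path on every listed node (one "COPY stream"). */
    static String buildCopy(String table, String path, List<String> nodes, String streamName) {
        StringJoiner sources = new StringJoiner(", ");
        for (String node : nodes) sources.add("'" + path + "' ON " + node);
        return "COPY " + table + " FROM " + sources
                + " DELIMITER '~' REJECTMAX 1000"
                + " EXCEPTIONS '/home/dbadmin/ej_" + streamName + "'"
                + " REJECTED DATA '/home/dbadmin/rj_" + streamName + "'"
                + " ENFORCELENGTH DIRECT STREAM NAME '" + streamName + "'";
    }

    /** Runs each statement on its own thread and waits until all of them finish. */
    static void runConcurrently(List<String> statements, Consumer<String> runner) {
        ExecutorService pool = Executors.newFixedThreadPool(Math.max(1, statements.size()));
        try {
            List<Future<?>> futures = new ArrayList<>();
            for (String sql : statements) futures.add(pool.submit(() -> runner.accept(sql)));
            for (Future<?> f : futures) f.get();   // rethrows the first failure, if any
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new RuntimeException(e);
        } catch (ExecutionException e) {
            throw new RuntimeException(e.getCause());
        } finally {
            pool.shutdown();
        }
    }

    public static void main(String[] args) {
        List<String> nodes = Arrays.asList("v_myv_node0001", "v_myv_node0002", "v_myv_node0003", "v_myv_node0004");
        List<String> statements = new ArrayList<>();
        for (int i = 1; i <= 2; i++)
            statements.add(buildCopy("customers", "/data/customer" + i + ".dat", nodes, "stream_customers_" + i));
        // Placeholder runner: real code would execute 'sql' on its own JDBC connection.
        runConcurrently(statements, sql -> System.out.println(Thread.currentThread().getName() + " -> " + sql));
    }
}
```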

The Book: CentOS System Administration Essentials

Packt Publishing, the UK-based technical book publisher, recently published this book. I am proud to be one of its reviewers.

This book is a guide for administrators and for developers who build applications that run on CentOS. It covers many subjects that administrators and developers need to know to work with Linux. It also includes bonus topics such as installing LDAP, Nginx and Puppet.

The book is not meant for Linux newcomers. There are many other books for that purpose.

This is the second book I have reviewed. The first one was Getting Started with Oracle Event Processing 11g.

Memorize Words

I have developed a simple GUI application, written in Python with wxPython, that makes memorizing words easier. The application builds a dictionary from any delimited text file and then quizzes you on words from that dictionary. It saves the correct/incorrect count for each word in the selected dictionary and asks only the words that have not been learned yet. In future versions I want to improve the question engine so that it picks more appropriate words.
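MemWord itself is written in Python. The small Java sketch below only illustrates the quiz logic described above: load a delimited file, count correct and wrong answers per word, and ask only words not yet learned. Several details are assumptions, not MemWord's actual behavior: the "learned" rule (three more correct than wrong answers), the random choice among pending words, and all names.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.Random;
import java.util.regex.Pattern;

public class WordQuizSketch {
    static final class Entry {
        final String word;
        final String meaning;
        int correct;
        int wrong;

        Entry(String word, String meaning) {
            this.word = word;
            this.meaning = meaning;
        }

        // Assumed rule: a word counts as learned once correct answers exceed wrong ones by 3.
        boolean learned() {
            return correct - wrong >= 3;
        }
    }

    private final List<Entry> entries = new ArrayList<>();
    private final Random random;

    /** Builds the dictionary from "word<delimiter>meaning" lines; malformed lines are skipped. */
    WordQuizSketch(List<String> lines, String delimiter, Random random) {
        for (String line : lines) {
            String[] parts = line.split(Pattern.quote(delimiter), 2);
            if (parts.length == 2) entries.add(new Entry(parts[0].trim(), parts[1].trim()));
        }
        this.random = random;
    }

    /** Returns a random word that is not learned yet, or null when every word is learned. */
    Entry next() {
        List<Entry> pending = new ArrayList<>();
        for (Entry e : entries) if (!e.learned()) pending.add(e);
        return pending.isEmpty() ? null : pending.get(random.nextInt(pending.size()));
    }

    /** Records the answer for this word and returns whether it was correct. */
    boolean answer(Entry e, String guess) {
        boolean ok = e.meaning.equalsIgnoreCase(guess.trim());
        if (ok) e.correct++; else e.wrong++;
        return ok;
    }

    public static void main(String[] args) {
        WordQuizSketch quiz = new WordQuizSketch(Arrays.asList("house;ev", "book;kitap"), ";", new Random());
        Entry e = quiz.next();
        System.out.println("Translate: " + e.word);
        System.out.println(quiz.answer(e, "ev") ? "correct" : "wrong, it is " + e.meaning);
    }
}
```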

The application has two built-in dictionaries: English-Turkish and Spanish-English.

This version is a release candidate, not a final release.

The application is simple and small and has not been tested comprehensively. Feature requests and bug reports would be highly appreciated.

GitHub repo: https://github.com/afsungur/MemWord

Further details:

https://github.com/afsungur/MemWord/blob/master/README.md

Java Pipes vs. Sockets in a Single JVM

In a recent project I implemented an InputStream in Java. There are many ways to achieve this. In this blog post I compare the performance of just two of them: sockets and pipes (PipedInputStream and PipedOutputStream).

The scenario is really simple: for each mechanism, I send 10 million and 100 million messages from a producer to a consumer. Each message is just a text sentence, “This is a message sent from Producer”.

I ran each scenario 3 times and averaged the response times. In both tests I used a PrintStream as the writer and a BufferedReader as the reader.

For pipes, the buffer size is set by passing an int value (the pipe size) to the PipedInputStream constructor. For sockets, it is set through the BufferedReader constructor. The buffer size in this test is 1024*1024*8 for both scenarios.
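To make the two buffer settings concrete, here is a small self-contained sketch of the same producer/consumer setup. It is not the author's test harness, because the post does not show PipeWriter, PipeReader, SocketWriter or SocketReader. Class and method names are assumptions of this sketch. The socket uses the loopback address and an ephemeral port instead of 4544. The pipe size goes into the PipedInputStream constructor, and the reader-side buffer goes into the BufferedReader constructor.

```java
import java.io.BufferedReader;
import java.io.IOException;
import java.io.InputStream;
import java.io.InputStreamReader;
import java.io.OutputStream;
import java.io.PipedInputStream;
import java.io.PipedOutputStream;
import java.io.PrintStream;
import java.io.UncheckedIOException;
import java.io.UnsupportedEncodingException;
import java.net.InetAddress;
import java.net.ServerSocket;
import java.net.Socket;
import java.nio.charset.StandardCharsets;

public class PipeVsSocketSketch {
    static final String MESSAGE = "This is a message sent from Producer";

    // Producer: writes n lines through a PrintStream (no auto-flush), then closes it to signal end of stream.
    static void produce(OutputStream out, long n) {
        try (PrintStream ps = new PrintStream(out, false, "UTF-8")) {
            for (long i = 0; i < n; i++) ps.println(MESSAGE);
        } catch (UnsupportedEncodingException e) {
            throw new UncheckedIOException(e);
        }
    }

    // Consumer: counts lines using a BufferedReader with a 'bufferSize'-char buffer.
    static long consume(InputStream in, int bufferSize) {
        long count = 0;
        try (BufferedReader r = new BufferedReader(new InputStreamReader(in, StandardCharsets.UTF_8), bufferSize)) {
            while (r.readLine() != null) count++;
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return count;
    }

    static long viaPipe(long n, int pipeSize) {
        try {
            PipedOutputStream pos = new PipedOutputStream();
            PipedInputStream pis = new PipedInputStream(pos, pipeSize);   // pipe buffer size
            Thread writer = new Thread(() -> produce(pos, n));
            writer.start();
            long received = consume(pis, pipeSize);
            writer.join();
            return received;
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
    }

    static long viaSocket(long n, int readerBufferSize) {
        InetAddress loopback = InetAddress.getLoopbackAddress();
        try (ServerSocket server = new ServerSocket(0, 1, loopback)) {   // port 0: any free port
            int port = server.getLocalPort();
            Thread writer = new Thread(() -> {
                try (Socket s = new Socket(loopback, port)) {
                    produce(s.getOutputStream(), n);
                } catch (IOException e) {
                    throw new UncheckedIOException(e);
                }
            });
            writer.start();
            try (Socket client = server.accept()) {
                long received = consume(client.getInputStream(), readerBufferSize);
                writer.join();
                return received;
            }
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
    }

    public static void main(String[] args) {
        long n = 1_000_000;
        int buffer = 1024 * 1024 * 8;
        long t0 = System.nanoTime();
        long pipeCount = viaPipe(n, buffer);
        long t1 = System.nanoTime();
        long socketCount = viaSocket(n, buffer);
        long t2 = System.nanoTime();
        System.out.printf("pipe:   %d messages in %d ms%n", pipeCount, (t1 - t0) / 1_000_000);
        System.out.printf("socket: %d messages in %d ms%n", socketCount, (t2 - t1) / 1_000_000);
    }
}
```

Timings from this sketch depend on the JVM and the machine, so they are not directly comparable to the results reported in this post.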

Results:

| Messages | Pipe | Socket |
|---|---|---|
| 10M | 12 sec | 26 sec |
| 100M | 72 sec | 239 sec |

The main code of the test:

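import java.io.IOException;

import java.io.PipedInputStream;

import java.io.PipedOutputStream;

// PipeWriter, PipeReader, SocketWriter and SocketReader are the author's thread classes (not shown)

public class PipeAndSocketTester {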

public static void main(String args[]) throws IOException {

boolean autoFlush = false;

long messageNumber = 10000000;

PipedOutputStream pos = new PipedOutputStream();

PipedInputStream pis = new PipedInputStream(pos, 1024*1024*10);

new PipeWriter(pos,messageNumber,autoFlush).start();

new PipeReader(pis).start();

// Socket test: run separately, after removing the pipe code above

new SocketWriter(4544,messageNumber).start();

new SocketReader(4544,1024*1024*10).start();

}

}


Oracle Event Processing – Pattern Matching Example 2

In my previous post I showed one of the pattern matching features of Oracle Event Processing. In this post I will show another example.

There are many built-in functions that can be used in CQL. I think one of the most important is the prev function, which makes it easy to access previous elements in the same stream or partition.

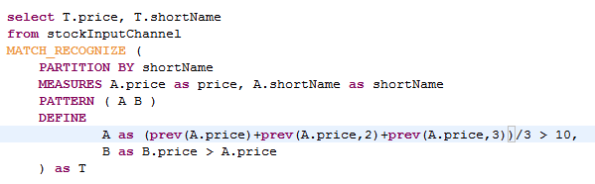

Consider this scenario: we have the stock values below, and I want to find the following pattern. First, the average of the previous 3 elements must be greater than 10. Then, the last value must be greater than the last value of the previous condition.

Written in CQL, these requirements look like this:

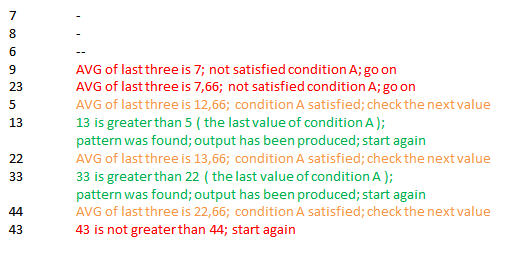

Let's walk through the numbers to understand how the pattern is matched (left side: incoming events; right side: the pattern matching expression):

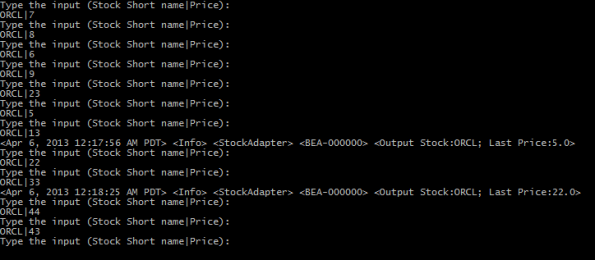

Run the application:

As shown above, once the 13 and 33 events arrived, the pattern was matched and an output was produced.

Oracle Event Processing – Pattern Matching Example

Event processing technologies have become popular in recent years. Companies realize that they need to act in real time to satisfy customer requirements, handle issues in internal system components, serve advertising, and so on.

Oracle Event Processing offers several methods for processing incoming real-time data. In this blog post I will show one of them: pattern matching.

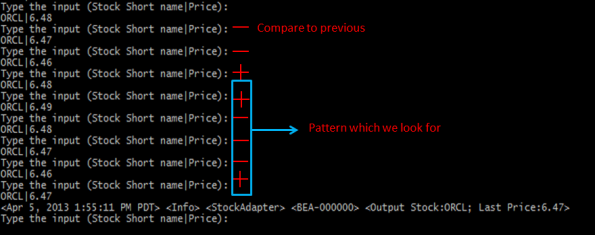

Suppose you want to find stocks whose price first increases, then decreases three times, and then increases again. For example:

6.48;6.47;6.46;6.48;6.49;6.48;6.47;6.46;6.47;6.46;6.45;6.44;6.43;6.42;6.43

In the numbers above, the pattern starts with 6.48 and ends with 6.47. The price first increases from 6.48 to 6.49, then decreases three times (6.48, 6.47, 6.46), and then increases again from 6.46 to 6.47.

I am using Oracle Event Processing 11.1.1.7 for Windows 64-bit, with Cygwin as the Windows terminal.

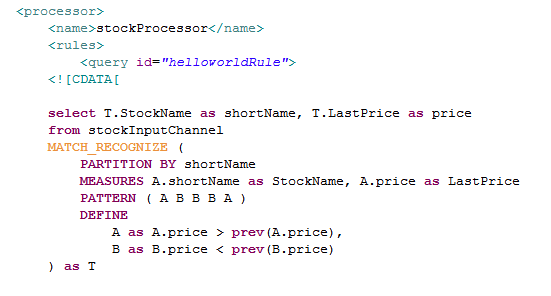

I slightly modified the default HelloWorld CEP application. The most crucial part is the CQL processor: how should the CQL be written to find the patterns?

Let’s briefly look at the clauses.

StockProcessor is the name of the CQL processor.

PARTITION BY shortName: partitions incoming data by shortName. The CQL engine evaluates each partition individually; for example, ORCL, IBM and GOOG prices are processed separately.

MEASURES: the values or fields that appear in the output.

PATTERN: the order of the conditions (see regular expressions for details).

DEFINE: defines the conditions; “A as” defines condition A.
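To make the roles of these clauses concrete outside OEP, here is a plain-Java approximation of the example pattern (up once, down three times, up again). It is neither CQL nor the OEP API. A per-symbol map of recent prices stands in for PARTITION BY shortName. A check over the last six prices stands in for PATTERN and DEFINE. The returned "name lastPrice" string stands in for MEASURES. Restarting the search after a match is an assumption of this sketch, not necessarily what OEP does.

```java
import java.util.ArrayDeque;
import java.util.Deque;
import java.util.HashMap;
import java.util.Map;

public class PartitionedPatternSketch {
    // One window of recent prices per stock symbol: the analogue of PARTITION BY shortName.
    private final Map<String, Deque<Double>> windows = new HashMap<>();

    /**
     * Feeds one event. Returns "shortName lastPrice" when the last six prices of that
     * symbol go up, down, down, down, up; otherwise returns null.
     */
    public String onEvent(String shortName, double price) {
        Deque<Double> w = windows.computeIfAbsent(shortName, k -> new ArrayDeque<>());
        w.addLast(price);
        if (w.size() > 6) w.removeFirst();
        if (w.size() < 6) return null;
        Double[] p = w.toArray(new Double[0]);
        boolean matched = p[1] > p[0]                                 // increases once
                && p[2] < p[1] && p[3] < p[2] && p[4] < p[3]          // decreases three times
                && p[5] > p[4];                                       // increases again
        if (!matched) return null;
        w.clear();   // assumption: matching restarts after the last matched event
        return shortName + " " + price;
    }

    public static void main(String[] args) {
        double[] orcl = {6.48, 6.47, 6.46, 6.48, 6.49, 6.48, 6.47, 6.46, 6.47, 6.46, 6.45, 6.44, 6.43, 6.42, 6.43};
        PartitionedPatternSketch processor = new PartitionedPatternSketch();
        for (double price : orcl) {
            processor.onEvent("IBM", 100.0);   // a separate partition; it does not affect ORCL
            String out = processor.onEvent("ORCL", price);
            if (out != null) System.out.println(out);
        }
    }
}
```

Fed with the example ORCL sequence, it reports one match ending at 6.47, the same pattern identified above. The interleaved IBM events are kept in their own partition.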

Run the application and type the stock names and prices as below:

As soon as the pattern is matched, an output event is produced. In this example I just print the name and the last price of the stock.

I haven’t done any performance measurements, but as the number of distinct stocks increases, performance will become the main concern. I would like to share some performance results in another blog post later.

The Book: Getting Started with Oracle Event Processing 11g

Packt Publishing, the UK-based technical book publisher, will publish a much-anticipated book in a few days.

This is the first book I have reviewed:

Getting Started with Oracle Event Processing 11g

One of the authors is Robin J. Smith, a great and far more experienced IT professional whom I met over lunch in Istanbul. Are you looking for a book that explains Event Processing concepts with really well-designed examples and covers the Oracle Event Processing 11g product in depth? Then this book is definitely for you.

Using Variable Based Hints in an ODI Knowledge Module

In an ODI interface, a knowledge module must be chosen. The knowledge module lists the steps that are executed in order. We can also add “Options” and “Checks” to make a knowledge module more generic; for more information, see the ODI documentation. In our knowledge module steps we use Oracle SQL statements, including SQL hints. How can we implement hints in the SQL statements used in the knowledge module?

Since many interfaces use the same knowledge module, it should be generic.

Suppose we add hints directly into the knowledge module, as shown below. Then we have to prepare a new knowledge module for each interface, and, as you can guess, this approach is rather cumbersome.

Instead, we can add KM options to the knowledge module, as my mentor explained in his blog (http://gurcanorhan.wordpress.com/2013/02/05/adding-hints-in-odi/).

That method is really useful when you need SQL hints in your SQL statements, but we thought we could make it a bit more practical. For example, to change a hint option value, such as increasing the parallel degree of a SELECT statement, we have to change the option in the interface. If your production environment has only an execution repository, you cannot do that.

Gurcan and I thought about it and developed a new method to make the hints more generic: we store the hints of each interface in a database table. Consider a knowledge module named “INCREMENTAL UPDATE” that has 10 steps with 15 DML statements in total, each of which contains hint clauses.

Such a table looks like this:

For example, the TEST_INTERFACE interface uses the “INCREMENTAL UPDATE” knowledge module, which has 9 DML statements that can contain hint clauses.

The problem is how to access this table and how to use its hint clauses in the knowledge module steps.

We know how to access the database in a knowledge module step. But how can we assign the hint clauses stored in the database to Java or Jython variables?

Step 1

Add a step to the knowledge module to get the hint clauses from the database. Name the step “Get Hints”.

Step 2

Select the “Command on Source” tab and, in the text area, write the SQL statement that retrieves the hint clauses:

The SQL statement I wrote may look a little complex, but it is not. The key point is that the query must return exactly ONE row; the number of columns does not matter. In fact, the query should return a row like this:

The other important part is that we use the

snpRef.getStep("STEP_NAME")

method to get the interface’s name. We need it because, just before an interface starts to run, its hints are read from the database table by interface name.

Step 3

After you have completed the query on the source tab, select the target tab and configure the Java variables as shown below:

We define 9 Java variables, each holding one hint clause. The values are read from the database and assigned to the Java variables. Pay attention here:

String insertFlow_insertHint="#HINT_1";

This means: read the “HINT_1” field (column) of the SQL query and assign it to the “insertFlow_insertHint” Java variable. Because SQL columns are assigned to Java variables, the SQL query must not return more than one row.

Step 4

Now that the hint clauses are in Java variables, the last point is how to use those Java variables in SQL statements.

In a knowledge module step, you can use the Java variables as shown above.
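Outside ODI, the logic of Steps 2 to 4 can be sketched in plain Java. The sketch looks up the single hint row for an interface, takes one column as a variable, and splices it into a DML statement. Everything here is hypothetical: the in-memory list standing in for the hint table, its INTERFACE_NAME and HINT_n column names, the hint value, and the target and flow table names. It is not ODI's substitution API. It only mirrors the same logic, including the exactly-one-row rule.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

public class HintLookupSketch {
    /** Mimics the "Command on Source" query: exactly one row of hints per interface name. */
    static Map<String, String> hintRow(List<Map<String, String>> hintTable, String interfaceName) {
        List<Map<String, String>> rows = new ArrayList<>();
        for (Map<String, String> row : hintTable)
            if (interfaceName.equals(row.get("INTERFACE_NAME"))) rows.add(row);
        // Assigning columns to variables only makes sense for a single row.
        if (rows.size() != 1)
            throw new IllegalStateException("expected 1 hint row for " + interfaceName + ", found " + rows.size());
        return rows.get(0);
    }

    /** A DML template with the hint spliced in, as a knowledge module step would use it. */
    static String insertStatement(String hint) {
        return "INSERT " + hint + " INTO target_table SELECT * FROM flow_table";
    }

    public static void main(String[] args) {
        // Hypothetical contents: one row per interface, one HINT_n column per DML statement.
        Map<String, String> row = new HashMap<>();
        row.put("INTERFACE_NAME", "TEST_INTERFACE");
        row.put("HINT_1", "/*+ APPEND PARALLEL(4) */");
        List<Map<String, String>> hintTable = Collections.singletonList(row);

        // Plays the role of: String insertFlow_insertHint="#HINT_1";
        String insertFlow_insertHint = hintRow(hintTable, "TEST_INTERFACE").get("HINT_1");
        System.out.println(insertStatement(insertFlow_insertHint));
    }
}
```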

In this blog post I tried to explain how to use variable-based SQL hints in ODI knowledge modules. Let’s discuss what else could be made more generic!

Coherence Index Performance

In a traditional database we use indexes to access filtered data as quickly as possible. In Coherence, indexes serve the same purpose: fast access to filtered data. If you want to retrieve all the items in the cache, an index is probably not appropriate, just as with a database index. For example, in an Oracle database, a filter's selectivity may be close to 1 (it returns almost every row). In that case the cost-based optimizer may choose not to use an index, because each index access requires additional I/O. Likewise, in Coherence you should decide carefully whether to use an index when retrieving data.

In this blog post I will run basic filter operations with and without indexes.

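import java.util.Set;

import com.tangosol.net.CacheFactory;

import com.tangosol.net.NamedCache;

import com.tangosol.util.Filter;

import com.tangosol.util.extractor.ReflectionExtractor;

import com.tangosol.util.filter.*;

// PersonGenerator is the author's helper class (not shown) that creates random person objects

NamedCache cache = CacheFactory.getCache("persons");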

PersonGenerator pg = new PersonGenerator();

cache.putAll(pg.getRandomPeople(2000000));

System.out.println("Cache loaded:"+cache.size()+" elements.");

Filter filter =

new OrFilter(

new AndFilter(

new EqualsFilter("getFirstName", "Hugo"),

new GreaterFilter("getAge", 50)

),

new AndFilter(

new EqualsFilter("getFirstName","William"),

new LessFilter("getAge",50)

)

);

First, I connected to the cluster and the cache. Then 2 million person objects were generated and put into the cache. Next, the filter was created. Pay a little more attention here: the outermost filter is an OrFilter that combines two AndFilters. Converted to an SQL query, the filter would be:

select * from people where (firstName = 'Hugo' and age > 50) or (firstName = 'William' and age < 50)

As you can see, only the firstName and age fields are used for filtering. If these fields are indexed, query performance can improve.
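Conceptually, an equality index on firstName lets the filter skip most entries. The plain-Java sketch below does not show how Coherence implements indexes internally. It contrasts two approaches. A full scan evaluates the filter against every entry. An inverted index (first name mapped to keys) narrows the candidates to Hugo and William before age is checked. The Person class and all method names are hypothetical.

```java
import java.util.Arrays;
import java.util.Collections;
import java.util.HashMap;
import java.util.HashSet;
import java.util.Map;
import java.util.Random;
import java.util.Set;

public class EqualityIndexSketch {
    static final class Person {
        final String firstName;
        final int age;
        Person(String firstName, int age) { this.firstName = firstName; this.age = age; }
    }

    // (firstName = 'Hugo' AND age > 50) OR (firstName = 'William' AND age < 50)
    static boolean matches(Person p) {
        return ("Hugo".equals(p.firstName) && p.age > 50)
            || ("William".equals(p.firstName) && p.age < 50);
    }

    /** Without an index: evaluate the filter against every entry. */
    static Set<Integer> scan(Map<Integer, Person> cache) {
        Set<Integer> keys = new HashSet<>();
        for (Map.Entry<Integer, Person> e : cache.entrySet())
            if (matches(e.getValue())) keys.add(e.getKey());
        return keys;
    }

    /** Equality index: first name -> keys of the entries with that first name. */
    static Map<String, Set<Integer>> indexByFirstName(Map<Integer, Person> cache) {
        Map<String, Set<Integer>> index = new HashMap<>();
        for (Map.Entry<Integer, Person> e : cache.entrySet())
            index.computeIfAbsent(e.getValue().firstName, k -> new HashSet<>()).add(e.getKey());
        return index;
    }

    /** With the index: only Hugo and William entries are fetched, then age is checked on them. */
    static Set<Integer> indexed(Map<Integer, Person> cache, Map<String, Set<Integer>> index) {
        Set<Integer> keys = new HashSet<>();
        for (String name : Arrays.asList("Hugo", "William"))
            for (Integer k : index.getOrDefault(name, Collections.emptySet()))
                if (matches(cache.get(k))) keys.add(k);
        return keys;
    }

    public static void main(String[] args) {
        String[] names = {"Hugo", "William", "Anna", "Mehmet", "Ayse", "John"};
        Random rnd = new Random(7);
        Map<Integer, Person> cache = new HashMap<>();
        for (int i = 0; i < 1_000_000; i++)
            cache.put(i, new Person(names[rnd.nextInt(names.length)], rnd.nextInt(90)));
        Map<String, Set<Integer>> index = indexByFirstName(cache);
        long t0 = System.nanoTime();
        int scanned = scan(cache).size();
        long t1 = System.nanoTime();
        int viaIndex = indexed(cache, index).size();
        long t2 = System.nanoTime();
        System.out.printf("scan: %d hits in %d ms, index: %d hits in %d ms%n",
                scanned, (t1 - t0) / 1_000_000, viaIndex, (t2 - t1) / 1_000_000);
    }
}
```

Both methods return the same keys. The indexed version only touches the Hugo and William entries.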

To get the filtered data, we call the entrySet method with the Filter object as its argument:

// Timer is the author's simple stopwatch helper (not shown), not java.util.Timer

Set filteredData;

Timer.start();

filteredData = cache.entrySet(filter);

Timer.stop();

System.out.println("Without Index:" + Timer.getDurationMSec());

It prints:

Without Index:12091

Times are in milliseconds.

So far we have not used any index. Now it is time to add one:

cache.addIndex(new ReflectionExtractor("getFirstName"), false, null);

Timer.start();

filteredData = cache.entrySet(filter);

Timer.stop();

System.out.println("With Index:" + Timer.getDurationMSec());

The addIndex method adds an index. Its first parameter is an extractor for the getter of the field to be indexed. The second parameter is a boolean that determines whether the index is sorted; a sorted index is useful for range queries such as ”age > 20”. The third parameter is an optional Comparator for a sorted index (null here). We did not use a sorted index here because we are only looking for equality on the first name, but we'll use a sorted index in the next one.

This code part prints:

With Index:5300

As you can see, with one index the entrySet call is more than twice as fast as without an index (5300 ms vs. 12091 ms).
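The sorted option of addIndex matters for range conditions such as the age > 50 and age < 50 parts of our filter. As a plain-Java analogy (again, not Coherence's implementation), a TreeMap keyed by age can answer a range query by walking only the relevant part of the index. An unsorted, hash-based index would have to check every distinct value instead. The class and method names are hypothetical.

```java
import java.util.HashMap;
import java.util.HashSet;
import java.util.Map;
import java.util.NavigableMap;
import java.util.Set;
import java.util.TreeMap;

public class SortedIndexSketch {
    /** Sorted index: age -> keys with that age, kept in ascending age order by the TreeMap. */
    static NavigableMap<Integer, Set<String>> build(Map<String, Integer> ageByKey) {
        NavigableMap<Integer, Set<String>> index = new TreeMap<>();
        for (Map.Entry<String, Integer> e : ageByKey.entrySet())
            index.computeIfAbsent(e.getValue(), k -> new HashSet<>()).add(e.getKey());
        return index;
    }

    /** "age > min": visits only the index entries above min. */
    static Set<String> olderThan(NavigableMap<Integer, Set<String>> index, int min) {
        Set<String> keys = new HashSet<>();
        for (Set<String> bucket : index.tailMap(min, false).values()) keys.addAll(bucket);
        return keys;
    }

    /** "age < max": visits only the index entries below max. */
    static Set<String> youngerThan(NavigableMap<Integer, Set<String>> index, int max) {
        Set<String> keys = new HashSet<>();
        for (Set<String> bucket : index.headMap(max, false).values()) keys.addAll(bucket);
        return keys;
    }

    public static void main(String[] args) {
        Map<String, Integer> ages = new HashMap<>();
        ages.put("p1", 20);
        ages.put("p2", 51);
        ages.put("p3", 60);
        ages.put("p4", 50);
        NavigableMap<Integer, Set<String>> index = build(ages);
        System.out.println("age > 50: " + olderThan(index, 50));   // p2 and p3 (set order may vary)
        System.out.println("age < 50: " + youngerThan(index, 50)); // p1
    }
}
```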

Our filter uses two fields: firstName, which we just indexed, and age. We can index the age field as well:

cache.addIndex(new ReflectionExtractor("getAge"), false, null);

Timer.start();

filteredData = cache.entrySet(filter);

Timer.stop();

System.out.println("With Two Indexes:" + Timer.getDurationMSec());

It prints:

With Two Indexes:1017

To get the filtered data set faster, we should index the object fields that are used in the filter. Indexing fields that the filter does not use gives no performance improvement, as the following test shows:

cache.removeIndex(new ReflectionExtractor("getFirstName"));

cache.removeIndex(new ReflectionExtractor("getAge"));

cache.addIndex(new ReflectionExtractor("getCitizenNumber"), true, null);

Timer.start();

filteredData = cache.entrySet(filter);

Timer.stop();

System.out.println("With Index BUT Not in the filter:" + Timer.getDurationMSec());

It prints:

With Index BUT Not in the filter: 11391

11391 milliseconds is almost the same as the duration without an index.

TROUG – Coherence Presentation and Demo Video

Yesterday I spoke at a Turkish Oracle User Group (TROUG) event at Bahcesehir University, Istanbul. I talked about the cache concept, why we need caching, and a few Oracle Coherence features. I also covered Coherence cache topologies such as Replicated Cache, Distributed Cache and Near Cache. Finally, I explained the Read-Through, Write-Through, Write-Behind Queue and Refresh-Ahead mechanisms.

It was a delightful event, but at the end of my presentation VirtualBox let me down. I wanted to give a demo in Eclipse, running inside OEL 5 on VirtualBox 4, but something went wrong: some of my Eclipse launch configurations had disappeared from the “Run As” section. I had many configurations, and I lost all of them.

Instead, I decided to upload a video that demonstrates some Oracle Coherence features and CohQL examples inside VirtualBox.

You can watch the video on Vimeo: